Tech

The Data Protection Bill will stop misuse of customer data and punish offenders, says IT MoS

According to government sources, a new data protection measure will be presented to Parliament during its winter session.

Gadgets

OnePlus Freedom Sale 2026 brings discounts on 15, 15R, 13, Nord 5 and tablets

OnePlus Freedom Sale 2026 begins Jan 16 with discounts on phones, tablets, and audio products across online and offline stores.

Auto

Mahindra XUV 7XO launched in India at Rs 13.66 lakh with major upgrades

Mahindra has launched the XUV 7XO facelift in India at Rs 13.66 lakh, offering upgraded design, advanced technology, and smart connectivity features.

Tech

Google Meet outage disrupts work calls, online classes across India

Google Meet faced a major outage across India, stopping users from joining meetings and prompting widespread complaints on social media.

India News, 17 hours ago

DMK leader’s son arrested after car rams family in Krishnagiri, one dead


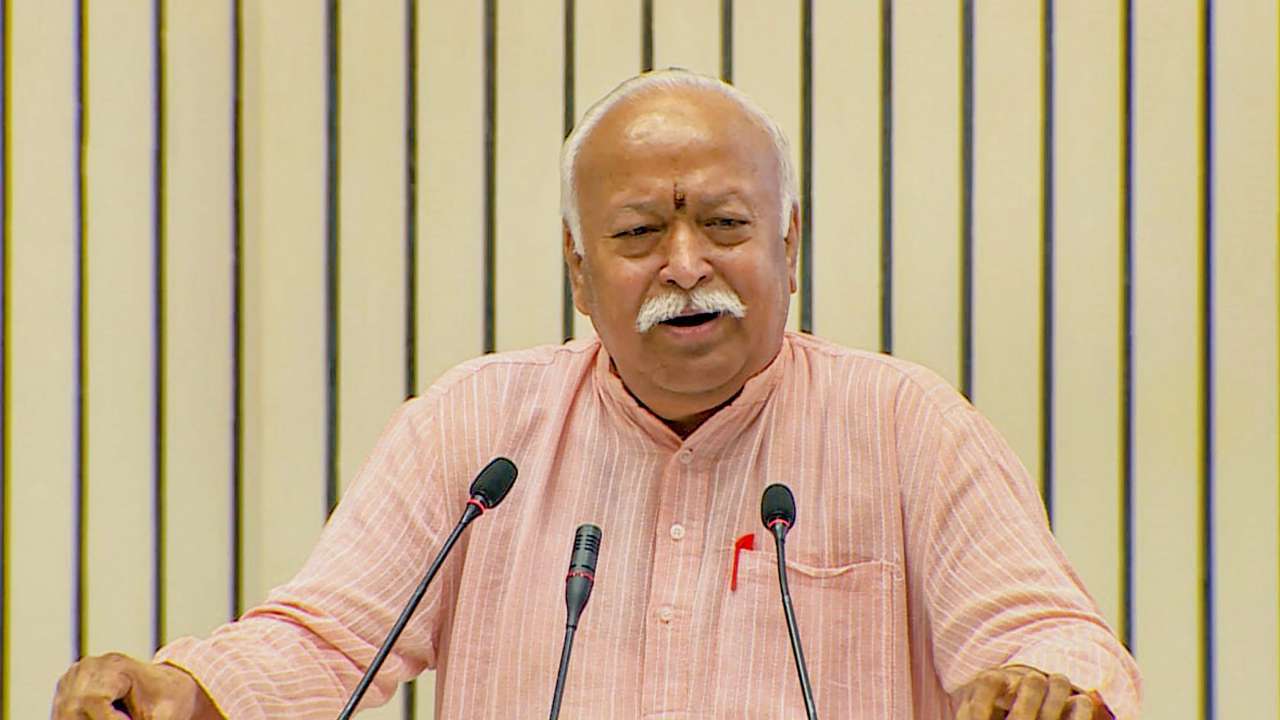

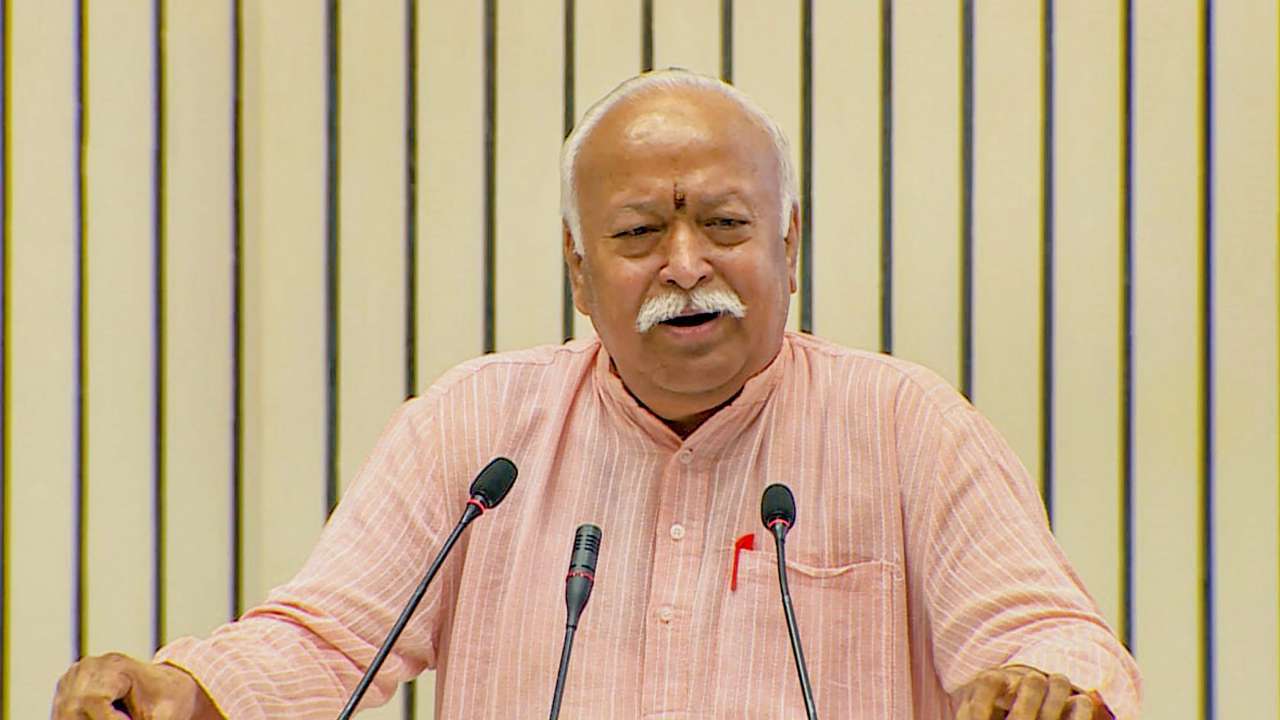

India News, 16 hours ago

RSS chief backs nationwide rollout of Uniform Civil Code, cites Uttarakhand model

India News, 7 hours ago

As stealth reshapes air combat, India weighs induction of Sukhoi Su-57 jets

Cricket news, 7 hours ago

Rinku Singh returns home from T20 World Cup camp due to family emergency

India News, 6 hours ago

Tamil Nadu potboiler: Now, Sasikala to launch new party ahead of election